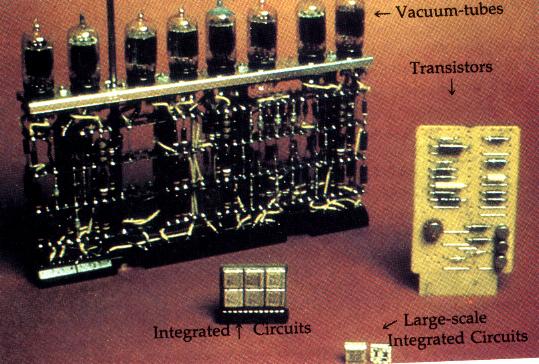

Although the dividing lines between the first three generations of computers are clearly marked by major technological advances, historians are less certain about when the fourth generation began. They do agree that in fourth-generation computers, magnetic-core memory had been replaced by memory on silicon chips.

Engineers continued to cram more circuits onto a single chip. The technique by which this was accomplished was called large-scale integration (LSI), which characterized fourth-generation computers (see picture below).

LSI put thousands of electronic components on a single silicon chip for faster processing (the shorter the route electricity has to travel, the sooner it gets there). At first, however, the functions a chip could perform were permanently fixed during the production process.

Ted Hoff, an engineer at Intel Corporation, introduced an idea that resulted in a single programmable unit: the microprocessor, or "computer on a chip." He packed the arithmetic and logic circuitry needed for computations onto one chip that could be made to act like any kind of calculator or computer desired. Other functions, such as input, output, and memory, were placed on separate chips. The development of the microprocessor led to a boom in computer manufacturing that brought computing power into homes and schools in the form of microcomputers.

As microcomputers became more popular, many companies began producing software that could run on the smaller machines. Most early programs were games; instructional programs appeared later. One important software development was VisiCalc, the first electronic spreadsheet for microcomputers, introduced in 1979. VisiCalc vastly expanded the possibilities for using microcomputers in the business world. Today, a wide variety of software exists for microcomputer applications in business, school, and personal use.

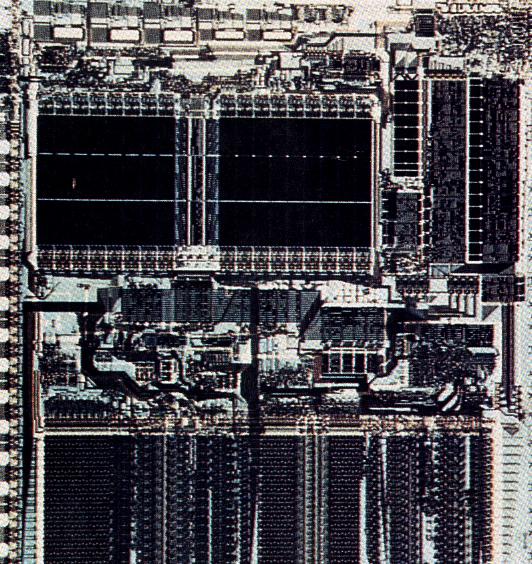

Very-large-scale integration (VLSI) has largely replaced large-scale integration. In VLSI, hundreds of thousands of electronic components can be placed on a single silicon chip. This further miniaturization of integrated circuits offers even greater improvements in the price, performance, and size of computers (see picture below).

A single microprocessor based on VLSI is more powerful than a room full of 1950s computer circuitry.

Trends in miniaturization led, ironically, to the development of the largest and most powerful computers: the supercomputers. By reducing the size of circuitry and changing the design of the chips, supercomputer manufacturers were able to build machines powerful enough, and with memories large enough, to handle the complex calculations required in aircraft design, weather forecasting, nuclear research, and energy conservation. In fact, the main processing unit of some supercomputers is so densely packed with miniaturized electronic components that, like the early mainframe computers, it must be cooled; it is submerged in a special liquid coolant bath that dissipates the tremendous heat generated during processing.

When will the fifth generation begin? Whereas most computers today mainly perform arithmetic operations, the next generation of computers will imitate human thinking and reason logically (i.e., will display artificial intelligence). In addition, experts predict that users will be able to communicate with these computers easily in their native languages (e.g., English). Computer capabilities have improved drastically in the past forty-five years, and a number of technological innovations on the horizon could start a new computer generation. It will most likely be up to future historians, however, to look back and identify the significant technological advance that thrust us into the fifth computer generation.

Last Updated Jan. 5/99